Why didn't you status?

Demystifying the public status process.

At GitHub we status fairly frequently (6 times in May so far — 18 times in April), especially compared to other providers. I often see questions from our customers (or support reps) about why we didn’t status for a particular issue or why their SLA is different from what appears on our status page.

Let’s take a minute to demystify this process. Before I do, it’s worth saying that we take availability (and outages) very seriously, and I would hate for a post on statusing to sound like a bunch of excuses. It’s not: there is a continuous availability push at GitHub and we keep working to get better.

Now might also be a good time to note that views expressed here are my own and not those of my employer.

Here are some high-level takeaways that I expand on below:

Statusing is based on the amount of customer impact.

SLAs are not the same as status page outages.

Not every customer is impacted by every outage.

Why didn’t you status?

I get this question a lot, so here are a few reasons why we might not have statused a particular issue for a customer.

The incident didn’t impact enough people.

This is a version of “your 9’s are not my 9’s,” but the unfortunate truth is that we do not status for every customer-impacting incident. Sometimes we know about things and we still don’t status them. Why not?

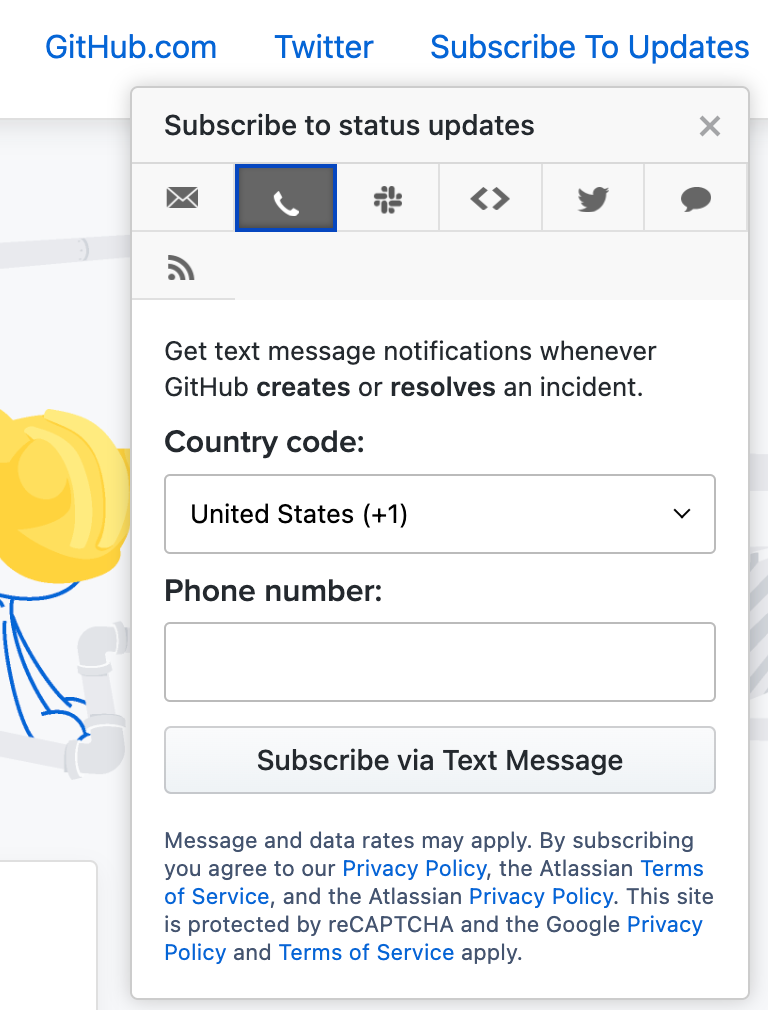

At the time I write this, our status page has over 22,000 subscribers, 4,000 of whom get a text message every time we post something there. It is a blunt instrument that needs to be wielded with care. Telling 22,000 people “we have a problem!” is something we can’t take lightly, or they’ll stop listening to us.

We define thresholds for impact before we declare a public incident. There is no nefarious reason for this, like hiding incidents; it is to avoid spamming our customers with every small blip in every service. We only tell you about incidents that start to have broad-reaching impact.

Does a problem with a single customer warrant a post to our status page? What about a problem impacting a feature that “makes things look weird” but doesn’t break anything? There is nuance in how we decide on posting.
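To make the idea of an impact threshold concrete, here is a minimal sketch of what such a check could look like. Everything in it is an assumption for illustration: the `ImpactSample` fields, the `should_status` function, and both threshold values are hypothetical, and they are not the real criteria we use. In practice a human still weighs the nuance described above.

```python
from dataclasses import dataclass


@dataclass
class ImpactSample:
    """Hypothetical snapshot of how one service is doing right now."""
    service: str
    failed_requests: int
    total_requests: int
    affected_customers: int


# Illustrative thresholds only; real values are not published in this post.
MIN_ERROR_RATE = 0.01         # at least 1% of requests failing
MIN_AFFECTED_CUSTOMERS = 50   # more than a handful of customers


def should_status(sample: ImpactSample) -> bool:
    """Return True when impact looks broad enough to consider a public post."""
    if sample.total_requests == 0:
        return False
    error_rate = sample.failed_requests / sample.total_requests
    return (error_rate >= MIN_ERROR_RATE
            and sample.affected_customers >= MIN_AFFECTED_CUSTOMERS)


# One customer hitting errors versus a broad outage.
single_customer = ImpactSample("webhooks", 900, 1_000_000, 1)
broad_outage = ImpactSample("git operations", 40_000, 1_000_000, 3_200)

print(should_status(single_customer))  # False
print(should_status(broad_outage))     # True
```

The single-customer case is still a real incident for that customer. It just doesn’t justify texting thousands of subscribers.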

We missed something.

I’d like to think we’ve made a lot of improvements in how we measure customer impact on the various endpoints at GitHub since I’ve been here, but that doesn’t mean we don’t have blind spots. Sometimes our measurements are flawed and we don’t realize something is broken (at that point we usually hear about it through customer reports). Nowadays these cases are pretty rare, but they still happen.

I can at least say, with the confidence of someone who has analyzed hundreds of incidents at GitHub over the past few years, that we are much better at detecting incidents these days and it’s rare that we don’t know something is wrong. I can also say with high confidence that when we don’t detect an incident, the detection gap is one of the first things we call out to fix in the incident review.

We don’t use automation to update our public status.

Right now humans update our status page, not machines, and humans are slower to do things than their robot counterparts.

With 22,000 people listening, I think it’s important that every update carries real information. Automatically posted outages without detail usually confuse customers, who typically want to know:

What is the actual problem and am I impacted by it?

When are you going to fix it?

Neither of these things is easy to automate and that means we can sometimes be late to the party when we status.

Why do you status so often?

On the other hand, I also get questions about why we status so often. Is our availability that bad? Why are there all of these incidents? Do these count against the SLA?

Some of our customers are large enterprises who rely on us every day and they have people who watch our status page and hold us accountable when we have incidents.

I wouldn’t want it any other way (it is nice to work for a company where we have a large amount of impact on the day-to-day work of so many developers), but it does lead to questions.

Here are some reasons why we status more often than you might think.

We maintain higher standards for statusing than our availability SLA.

Public Status does not equal SLA. This is important for a number of reasons:

I consider the SLA to be the absolute minimum bar we need to meet in order not to hand our customers money. This isn’t the bar we’re actually trying to meet for our product, it is the absolute minimum.

We should do better than letting people know when we are hitting the absolute minimum bar for availability. If we start to detect a problem we should let people know so they can react accordingly.

The SLA doesn’t cover a number of products and features (there are 8 broad categories listed in that document and 10 on our status page). Even if we don’t cover it in our SLA, we should still let customers know if it stops working.

Not every customer is impacted by every outage.

It can get lost that when we post to our status page, we are not telling every single customer they will encounter a problem. We are telling them a problem is more likely.

There are often incidents that do not impact certain classes of customers at all, or impact them only slightly. If you measure the raw “downtime minutes” of our status page it will give you an inaccurate impression of the actual downtime of a single customer.

Still, we don’t want to be beholden to transparency calculus and it’s important to separate our status page from our SLA, even if it makes us “look worse”.
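Here is a small worked example of why raw “downtime minutes” from the status page overstate what a single customer sees. The incidents, durations, and feature names below are made up for illustration, and the `Incident` type and both functions are hypothetical helpers, not anything we actually run.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    """Hypothetical status-page incident."""
    minutes: int
    affected_features: frozenset


def status_page_minutes(incidents: list) -> int:
    """Total minutes the status page showed any incident."""
    return sum(incident.minutes for incident in incidents)


def customer_minutes(incidents: list, features_used: set) -> int:
    """Minutes of incidents that touched a feature this customer uses."""
    return sum(
        incident.minutes
        for incident in incidents
        if incident.affected_features & features_used
    )


# Made-up month of incidents.
incidents = [
    Incident(45, frozenset({"actions"})),
    Incident(30, frozenset({"pages"})),
    Incident(20, frozenset({"git", "api"})),
]

print(status_page_minutes(incidents))                 # 95
print(customer_minutes(incidents, {"git", "issues"}))  # 20
```

Even the per-customer number is an upper bound in this sketch, because an incident on a feature you use often affects only a fraction of requests or customers.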

We have hundreds of features.

As it turns out, we have a lot of things that can break. There are already 10 different buckets of “stuff” on our status page, and that doesn’t even include email, Dependabot, and so on.

We have a way to declare an incident without attaching it to a specific service, which means features outside those buckets can still end up on the status page when they break. I expect some of them to break periodically. It’s the nature of maintaining so many different features and products.

We can still do better.

The reality is that there are still plenty of places where we can do better with our availability (and we are working diligently in those areas).

Ultimately, nobody cares about the things we are trying to fix — they want GitHub to be reliable and available all the time — and our job is to get the complex system we steward to the point where things that break have so little impact as to not be noticed by our end users.

How to deal with statusing as a customer.

If you’re a customer it can feel pretty bad to see a service you rely on status. There are a few things you can do that might help you navigate the status page waters:

Always file support tickets when you encounter an outage, whether it has been reported on the status page or not. This might be intuitive, but sometimes customers assume that because we reported an outage they should not file a ticket. There are some good reasons to file support tickets: (1) we read them and compare them to outages so they will help make sure we can verify our own metrics, (2) we care about your feedback and it gets back to the engineering teams, (3) if you do encounter a time when we haven’t statused yet it is good to make sure we know.

Recognize that statusing doesn’t automatically mean impact to your day-to-day workflow (sometimes it does, but you should verify). Not all outages are equal, and it’s important to verify how you are impacted rather than relying only on the status page updates.

Consider your backup plan for business-critical operations. We publish an SLA to advertise our availability, but some customers have higher demands than the published numbers. If you’re in that segment, then you need to have a backup plan for an outage. At the least, you need to understand your dependencies and what your break-glass scenarios are.
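If you are deciding whether you need a backup plan, it can help to translate an availability target into a downtime budget. The sketch below does that arithmetic for a 30-day month. The targets shown are generic examples and are not the numbers from our SLA, and `downtime_budget_minutes` is a name I made up for this post.

```python
def downtime_budget_minutes(availability_pct: float, days: int = 30) -> float:
    """Minutes of downtime allowed over `days` at a given availability target."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - availability_pct / 100)


# Generic example targets, not a published SLA.
for target in (99.0, 99.9, 99.99):
    budget = downtime_budget_minutes(target)
    print(f"{target}% over 30 days allows about {budget:.1f} minutes of downtime")
```

If your business can’t tolerate the budget implied by the published number, that gap is what your backup plan has to cover.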

Conclusion

It’s important to remember there are humans at both ends of the status process, and we are all (hopefully) doing our best to keep our software running. While some of the trappings of SLAs and impact can get tricky, it’s important to be as transparent as possible when statusing, while still respecting the giant megaphone that it represents.